Pre-trained Low-light Image Enhancement Transformer

Graphical Abstract

Abstract

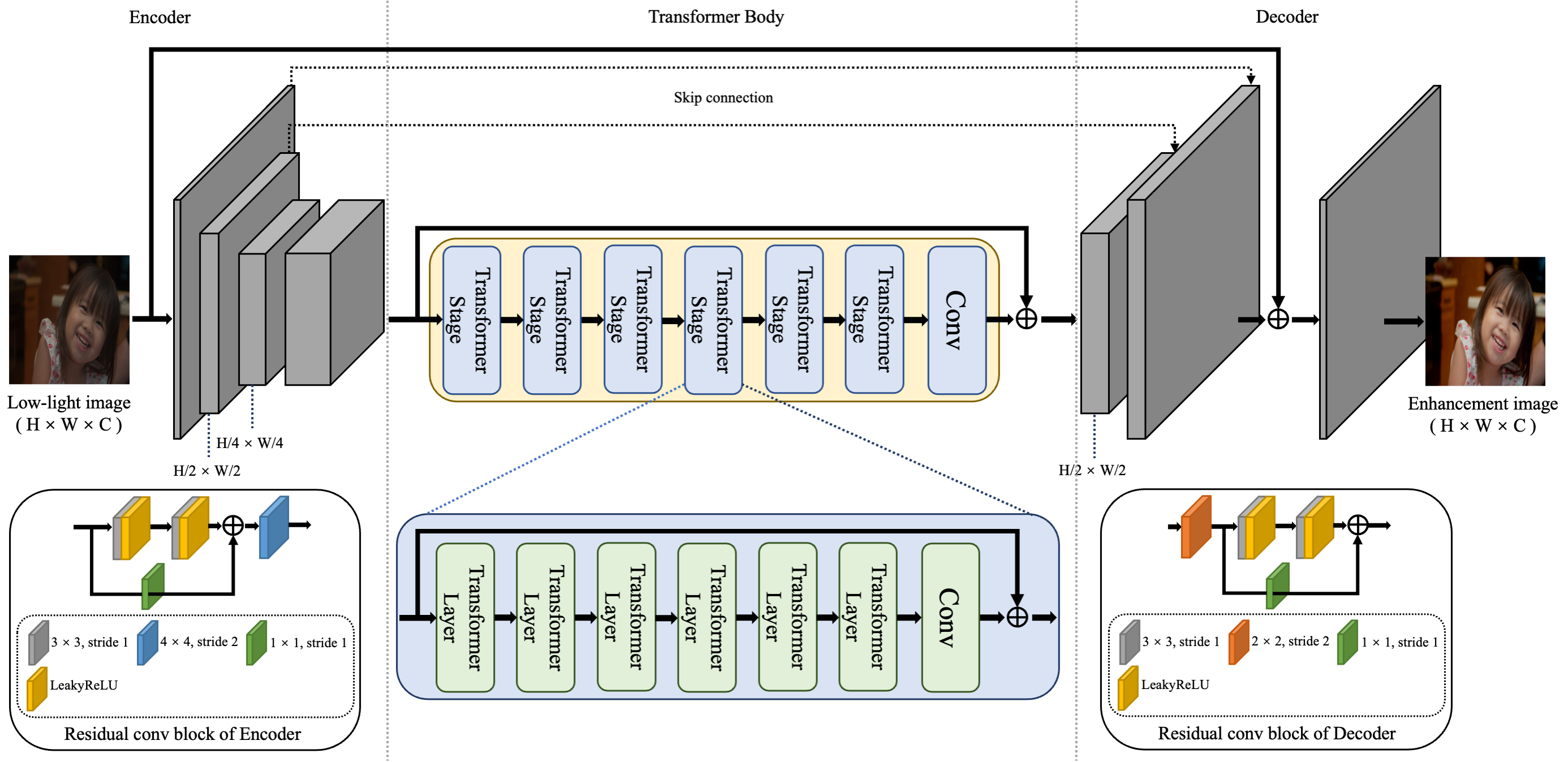

Low-light image enhancement is a long-standing low-level vision problem, because images captured in low light frequently suffer from serious aesthetic quality flaws. Current low-light image enhancement methods based on deep neural networks have made impressive progress on this task. Unlike the mainstream CNN-based methods, we propose an effective low-light enhancement solution inspired by the Transformer, which has demonstrated impressive performance across various tasks. The solution has two key components: an image synthesis pipeline and a powerful Transformer-based pre-trained model named LIET. Specifically, the image synthesis pipeline consists of illumination simulation and realistic noise simulation, which together produce more realistic low-light images and alleviate the bottleneck of data scarcity. LIET consists of a pair of streamlined CNN-based encoder-decoders and a Transformer body, which can effectively extract global and local contextual features at relatively low computational cost. We evaluate the proposed approach through extensive experiments, and the results demonstrate that our solution is highly competitive with state-of-the-art methods. All code will be released soon.
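The abstract does not specify how the illumination and noise simulations are implemented. As a rough illustration only, the sketch below shows one common way to synthesize a low-light image from a normal-light one. It darkens the image with a gamma curve and linear attenuation (illumination), then adds Poisson shot noise and Gaussian read noise (sensor noise). This is an assumption-based sketch, not LIET's actual pipeline. The function name `synthesize_low_light` and all parameter values (`gamma`, `scale`, `photons`, `read_std`) are hypothetical.

```python
import numpy as np


def synthesize_low_light(img, gamma=2.5, scale=0.2, photons=300.0,
                         read_std=0.01, rng=None):
    """Hypothetical low-light synthesis (illustrative assumption, not LIET's method).

    img: normal-light image as floats in [0, 1], any shape (e.g. H x W x 3).
    Returns a darkened, noisy image of the same shape, clipped to [0, 1].
    """
    rng = np.random.default_rng() if rng is None else rng
    img = np.clip(np.asarray(img, dtype=np.float64), 0.0, 1.0)

    # Illumination simulation (assumed): gamma curve, then linear attenuation.
    dark = scale * img ** gamma

    # Shot noise (assumed Poisson): photon count proportional to intensity.
    noisy = rng.poisson(dark * photons) / photons

    # Read noise (assumed zero-mean Gaussian, independent of the signal).
    noisy = noisy + rng.normal(0.0, read_std, size=dark.shape)

    return np.clip(noisy, 0.0, 1.0)


if __name__ == "__main__":
    clean = np.full((4, 4, 3), 0.5)
    low = synthesize_low_light(clean, rng=np.random.default_rng(0))
    print(clean.mean(), low.mean())  # the synthetic image is much darker
```

The Poisson-plus-Gaussian model is chosen here only because it is a widely used approximation of sensor noise. A pipeline aiming for "realistic noise" may model additional effects, such as camera-specific noise statistics, that this sketch omits.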